The Ascent of AI in the Cloud-to-Edge Continuum, Part 1

Part 1 of this blog series introduces Edge AI and Cloud AI and finishes with how Wind River’s eLxr Pro spans the two environments with a powerful and versatile Linux foundation.

The rise of artificial intelligence (AI) across the cloud-to-edge continuum has widened the gap between the processing, storage, and communication capabilities of edge devices and those of data centers. As AI and machine learning applications become more data-intensive, data centers are taking on increasingly complex processing, while edge devices struggle to keep up with the growing computational demands.

The convergence of AI with cloud and edge computing is reshaping how businesses and industries operate. At the forefront of this evolution is the growth of AI orchestration in cloud-to-edge environments. This emerging paradigm combines distributed computing with intelligent algorithms to optimize processes, improve decision-making, and deliver real-time insights closer to where data is generated.

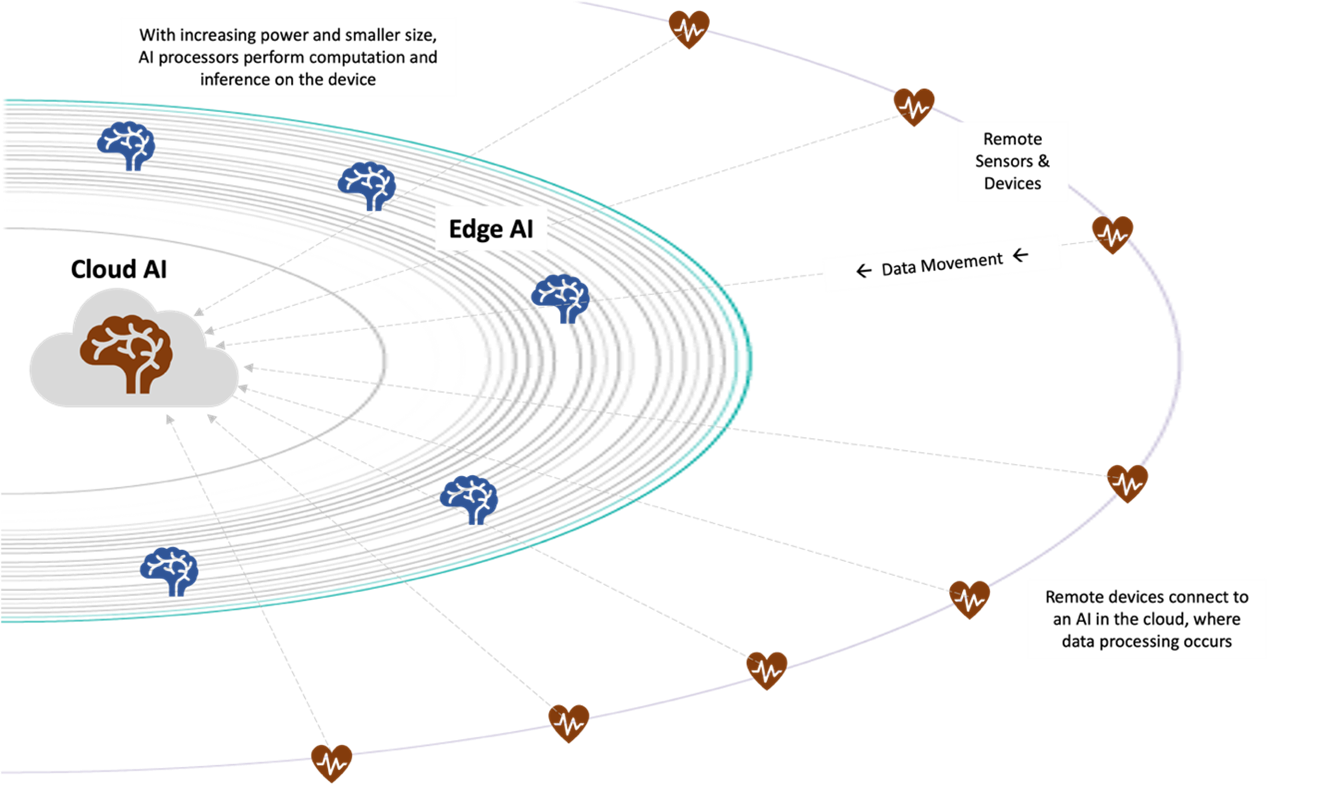

In the ever-evolving landscape of artificial intelligence, two major paradigms have emerged: Edge AI and Cloud AI. Cloud AI refers to the deployment of AI algorithms and models in centralized data centers accessed through the internet. These cloud-based systems are renowned for their massive computational power and storage capabilities, making them ideal for training complex machine learning models. In contrast, Edge AI involves deploying AI algorithms directly on devices or at the "edge" of the network, closer to where data is generated. This approach allows for real-time processing and decision-making, with minimal latency.

Edge AI vs. Cloud AI

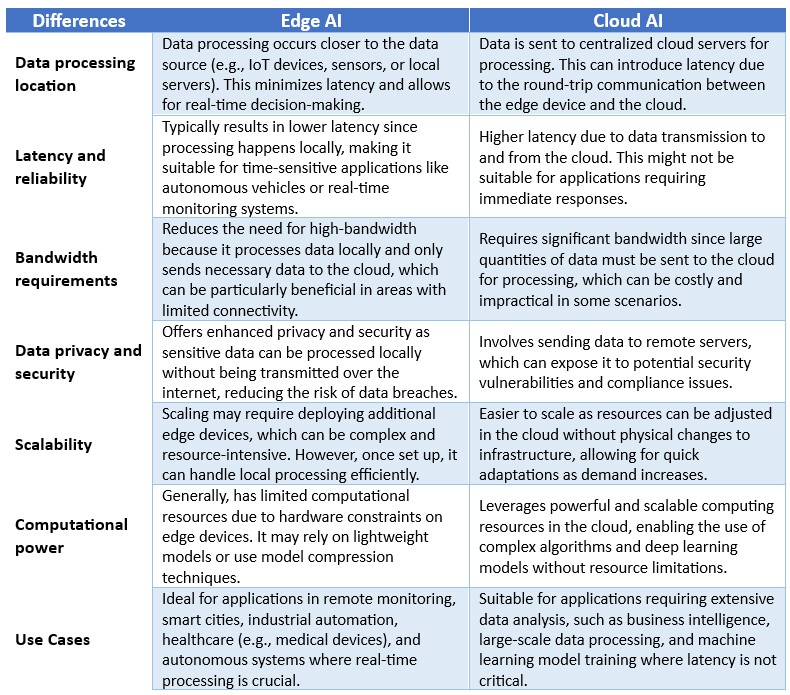

Edge AI and Cloud AI represent two distinct approaches to deploying artificial intelligence, each with its own advantages, use cases, and limitations. Here are the key differences between the two:

Both Edge AI and Cloud AI have unique strengths and can often complement each other in hybrid architectures. Organizations must evaluate their specific needs, such as latency requirements, data volume, privacy concerns, and application criticality, to determine the best approach for their AI deployment.

When deploying Edge AI or Cloud AI, organizations must weigh the trade-offs of each approach, including cost, reliability, latency, network communications, data privacy, power, and storage.

Cloud AI benefits from scalability and the ability to handle large-scale data processing tasks. However, it is often hindered by latency issues and reliance on robust internet connectivity. Edge AI reduces latency and enhances privacy by keeping data local, but it is limited by the computational and storage constraints of edge devices. Additionally, Edge AI can sometimes require more sophisticated management due to the distributed nature of the devices.
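To make these trade-offs more concrete, the sketch below encodes one possible rule of thumb for placing a single inference workload. It is a minimal illustration, not a Wind River tool or method: the `Workload` and `Link` types, their fields, and `choose_placement` are all hypothetical, and it considers only latency, privacy, connectivity, and whether the model fits on the edge device, ignoring cost and power.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of one inference workload's requirements."""
    latency_budget_ms: float      # end-to-end deadline for a single decision
    data_must_stay_local: bool    # privacy or regulatory constraint
    edge_inference_ms: float      # estimated model runtime on the edge device
    edge_model_fits: bool         # does the model fit in edge memory and storage?


@dataclass
class Link:
    """Hypothetical description of the edge-to-cloud network path."""
    round_trip_ms: float          # network round-trip time to the data center
    reliable: bool                # is connectivity dependable enough to rely on?


def choose_placement(w: Workload, link: Link, cloud_inference_ms: float) -> str:
    """Return 'edge', 'cloud', or 'unsupported' using a simple rule of thumb."""
    # The edge is viable only if the model fits and runs within the deadline.
    edge_ok = w.edge_model_fits and w.edge_inference_ms <= w.latency_budget_ms
    # Privacy constraints or an unreliable link rule out the cloud entirely.
    if w.data_must_stay_local or not link.reliable:
        return "edge" if edge_ok else "unsupported"
    # Cloud latency includes the network round trip, not just model runtime.
    cloud_total_ms = link.round_trip_ms + cloud_inference_ms
    # Prefer the cloud's scalability whenever it still meets the deadline.
    if cloud_total_ms <= w.latency_budget_ms:
        return "cloud"
    return "edge" if edge_ok else "unsupported"
```

For example, a vision workload with a 20 ms budget over a 45 ms round-trip link would land on the edge, while a large reporting model with a two-second budget would go to the cloud. A real placement policy would also weigh cost, power, and management overhead, which this sketch deliberately leaves out.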

Combining the high-volume data gathering and reliability that edge computing unlocks with the storage capacity and processing power of the cloud lets organizations operate their applications and IoT devices quickly and efficiently. Even better, they can do so without sacrificing valuable analytical data that could help them improve their goods and services and drive innovation.
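One common way to realize this hybrid pattern is to condense raw data on the edge device and send only a compact summary, plus any unusual readings, to the cloud for deeper analysis. The sketch below is a minimal, hypothetical illustration of that idea: `summarize_window`, the summary fields, and the fixed `threshold` used to flag anomalies are all assumptions, not part of any Wind River product.

```python
from statistics import mean


def summarize_window(readings: list[float], threshold: float) -> dict:
    """Condense one window of raw sensor readings into a compact summary.

    Hypothetical edge-side step: the summary is what would be uploaded to
    the cloud instead of every raw reading.
    """
    if not readings:
        raise ValueError("window must contain at least one reading")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Keep raw values above the threshold so the cloud can still analyze them.
        "anomalies": [r for r in readings if r > threshold],
    }
```

The design choice here is to trade raw-data volume for a bounded summary while preserving the outliers that are most likely to carry analytical value.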

Wind River Solutions for AI Workloads

Wind River’s eLxr Pro, a versatile enterprise Linux distribution for cloud-to-edge deployments, provides a foundation for running these AI workloads consistently and optimally. The true value of AI lies in its ability to work alongside and integrate with your existing systems and processes. eLxr Pro enables exactly this, building on Wind River’s decades of experience with intelligent edge systems.

Wind River's eLxr Pro is a comprehensive edge AI solution designed to streamline the deployment and management of AI workloads at the edge. It offers robust tools for developing, training, and deploying AI models, addressing the unique challenges of edge computing environments.

Learn more about what eLxr Pro can do for you at www.windriver.com/products/elxr-pro.

Part 2 will discuss Edge-Cloud AI workloads across various industries, finishing with how Wind River solutions (eLxr Pro and Wind River Cloud Platform) span the two environments and address the challenges that arise in supporting AI and AI orchestration workloads.

Part 3 will discuss the trade-offs in deploying Edge AI and Cloud AI workloads, finishing with how Wind River solutions (eLxr Pro and Wind River Cloud Platform) span the two environments and address the challenges that arise in supporting AI and AI orchestration workloads.