Benefits of Using OpenStack as a Containerized Service in StarlingX

Using OpenStack as a containerized service in StarlingX offers significant advantages in performance, lifecycle management, availability, and operational efficiency for cloud computing platforms.

Enterprises want distributed environments that run efficiently across multiple locations, such as corporate data centers, remote facilities, and hybrid cloud environments. To succeed, IT teams need oversight and control, including full system visibility, the ability to deploy seamless system upgrades and patches, and meaningful lifecycle management. Plus, to support real-time workloads and applications that simply cannot fail, they need low-latency, deterministic performance and high availability.

The open source cloud computing platform OpenStack can ease the effort of building and managing these large virtualized data centers. OpenStack is a suite of software components that provide the functionality to run cloud platforms; it encompasses everything from virtualizing servers, networks, and other infrastructure to databases as a service, orchestration, container services, and monitoring services. Because it is open source, OpenStack offers flexibility and avoids vendor lock-in, and the industry has found OpenStack valuable for migration from VMware technologies.

Notably, OpenStack is a core component of StarlingX, an open source edge computing platform that integrates components from established open source projects, hardened and optimized for distributed cloud environments. By using OpenStack within platforms such as StarlingX, enterprises can effectively replace traditional virtualization technologies, achieving an adaptable and resilient cloud infrastructure.

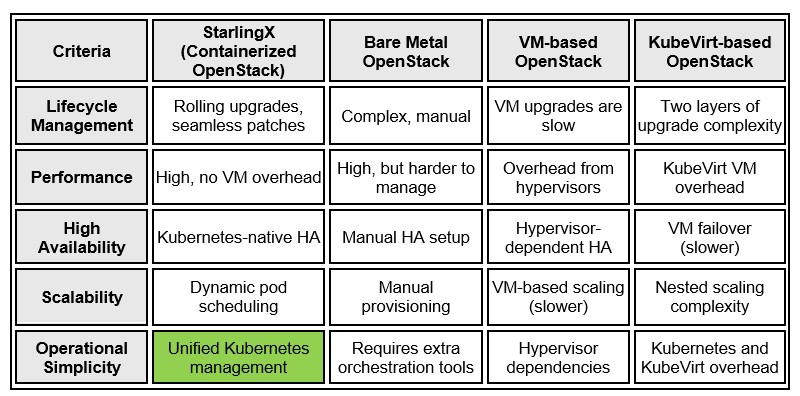

StarlingX’s unique approach is to deploy OpenStack as a containerized service rather than relying on traditional bare metal, virtual machine (VM)–based, or KubeVirt-based methods. This approach offers significant advantages in lifecycle management, operational simplicity, performance and resource efficiency, high availability, and scalability, particularly when compared with alternatives such as Red Hat’s Stack on Shift (OpenStack on OpenShift), Canonical’s MAAS/Juju, or traditional VM-based deployments.

Exceptional Lifecycle Management

Establishing a cloud infrastructure is only the first step. From then on, IT organizations have to keep those systems secure, up to date, and functional. Software, applications, and servers must be deployed at scale across platforms, environments, and locations, and then updated as necessary.

Doing so effectively relies on processes that are repeatable, reliable, automated, unattended, and scheduled. Tools help — or they should.

Alternatives

With cloud infrastructures based on bare metal deployments (such as traditional OpenStack), upgrades require time-consuming manual migration processes or full-system redeployments. Components are tightly coupled, which makes individual service upgrades riskier and more disruptive.

An alternative is VM-based OpenStack deployment (that is, running the OpenStack services themselves on VMs), but that adds overhead and complexity and consumes excessive compute and storage resources. Worse, it’s cumbersome to upgrade VMs, and doing so can introduce inconsistencies between hypervisors and OpenStack services.

KubeVirt-based OpenStack, in which the OpenStack services run in VMs on top of Kubernetes, complicates upgrades, because the Kubernetes and KubeVirt layers must each be maintained separately.

StarlingX

In contrast, with StarlingX’s approach:

- OpenStack runs as containerized services within Kubernetes, so it benefits from containerized lifecycle management.

- Zero-downtime rolling upgrades are possible, with smooth transitions between OpenStack releases using Kubernetes-native orchestration.

- The entire OpenStack control plane can be restarted, upgraded, or scaled independently of the underlying infrastructure.

StarlingX has used this approach for more than five years and thus represents the most mature industry offering utilizing containerized OpenStack.
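
To make the rolling-upgrade idea concrete, here is a minimal Python sketch of the pattern. It is a toy model, not StarlingX or Kubernetes code: the `Replica` class, the `rolling_update` function, and the release names are hypothetical, and the `max_unavailable` parameter is only loosely modeled on the `maxUnavailable` setting of a Kubernetes Deployment's rolling update strategy.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    version: str
    ready: bool = True


def rolling_update(replicas, new_version, max_unavailable=1):
    """Upgrade replicas in batches of at most max_unavailable.

    Returns the lowest number of ready replicas seen during the rollout.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    min_ready = sum(r.ready for r in replicas)
    pending = [r for r in replicas if r.version != new_version]
    for start in range(0, len(pending), max_unavailable):
        batch = pending[start:start + max_unavailable]
        # Take only this batch out of service; the rest keep serving.
        for r in batch:
            r.ready = False
        min_ready = min(min_ready, sum(r.ready for r in replicas))
        # Swap the version and mark the batch ready again
        # (a stand-in for passing a readiness check).
        for r in batch:
            r.version = new_version
            r.ready = True
    return min_ready


# Hypothetical three-replica API service moving between two releases.
api = [Replica(f"api-{i}", "release-a") for i in range(3)]
lowest = rolling_update(api, "release-b", max_unavailable=1)
print(lowest, [r.version for r in api])
```

With three replicas and one allowed offline at a time, two replicas remain in service at every step, which is what lets the service as a whole stay up while each instance is replaced.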

Operational Simplicity

Much of a cloud administrator’s daily life is monitoring system health and responding appropriately when needed. The IT staff needs consistency and compatibility, with the ability to adjust configurations with the click of a mouse and patch security vulnerabilities in a standard manner.

This is best accomplished with some sort of unified management interface, but such tools vary significantly in how well they support that oversight.

Alternatives

Bare metal OpenStack, for instance, requires complex deployment tools such as Juju, TripleO, or manual Ansible configurations, and using these tools often involves many manual steps and command-line details. High-availability and monitoring setups require additional work and several pots of coffee to get things right.

To get a system overview and control panel for VM-based OpenStack, administrators need an extra layer of tooling to manage things, along with complex dependencies on hypervisors and storage backends. That increases administrative overhead rather than reducing it.

Managing OpenStack inside VMs is counterintuitive. It introduces multiple levels of orchestration, and the VM overhead slows operations and consumes more server resources, adding to the cost of deployment.

StarlingX

None of these are ideal. Instead, StarlingX:

- Provides a centralized management interface for Kubernetes, OpenStack, and distributed cloud sites

- Has integrated tools for automated system health checks, monitoring, and logging

- Uses Kubernetes-native tools such as Helm and container registries for OpenStack service deployment and upgrades, enabling OpenStack in StarlingX to be a “single button” deployment
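
As a rough illustration of what a Helm-driven deployment step looks like, the Python sketch below assembles a generic `helm upgrade --install` command line. It shows plain Helm usage, not StarlingX's own application workflow; the helper name `helm_upgrade_command`, the release name, the chart path, the namespace, and the values file are all hypothetical.

```python
import shlex


def helm_upgrade_command(release, chart, namespace, values_file=None, wait=True):
    """Build the argv for an idempotent Helm install-or-upgrade."""
    argv = ["helm", "upgrade", "--install", release, chart,
            "--namespace", namespace]
    if values_file:
        # Site-specific overrides live in a separate values file.
        argv += ["--values", values_file]
    if wait:
        # Block until the release's resources report ready.
        argv.append("--wait")
    return argv


# Hypothetical release, chart path, namespace, and overrides file.
cmd = helm_upgrade_command(
    "keystone", "./charts/keystone", "openstack",
    values_file="keystone-overrides.yaml",
)
print(shlex.join(cmd))
```

Because `helm upgrade --install` covers both the first deployment and later upgrades, the same command can be rerun by automation, which is the kind of repeatability a one-step deployment depends on.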

Performance and Resource Efficiency

Any cloud infrastructure has to support expected workloads. Applications and services deployed in a cloud environment must meet acceptable standards for responsiveness, scalability, reliability, and overall performance. When response times, throughput rates, resource utilization (CPU, memory, network), and error rates miss their targets, the IT team has to adjust system behavior to ensure that service-level agreements (SLAs) are met and to verify that the system can maintain high performance and availability under typical and peak usage scenarios.

Alternatives

While bare metal deployments can be efficient, they are hard to manage. They require complex service orchestration and manual optimizations. Service isolation is limited, making it difficult to tune scaling and performance.

VM-based OpenStack options encounter performance bottlenecks due to nested virtualization and additional abstraction layers. A common issue is resource overhead from running hypervisors such as KVM or ESXi.

And running OpenStack inside KubeVirt VMs wastes Kubernetes’ scheduling efficiency. It also increases the maintenance burden, because operators must manage Kubernetes and KubeVirt as separate infrastructure layers.

StarlingX

In contrast, with StarlingX:

- OpenStack services run as lightweight, containerized workloads directly orchestrated by Kubernetes, which eliminates hypervisor and VM overhead.

- Direct hardware acceleration support (DPDK, SR-IOV, GPU passthrough) ensures low-latency and high-performance networking and compute.

- Kubernetes’ native pod scheduling efficiently places OpenStack services across nodes, which optimizes CPU and memory utilization.
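
The placement idea in the last point can be sketched in a few lines of Python. This is a deliberately simplified model of the "filter, then score" pattern, not the actual Kubernetes scheduler; the function `place_services`, the node names, and the resource figures are hypothetical, and only the service names come from OpenStack.

```python
def place_services(services, nodes):
    """Assign each service to the fitting node with the most free CPU.

    services: dict of service name -> (cpu_millicores, memory_mib) requested
    nodes:    dict of node name -> (cpu_millicores, memory_mib) available
    Returns a dict of service name -> node name.
    """
    free = {name: list(capacity) for name, capacity in nodes.items()}
    placement = {}
    # Place the largest CPU requests first so big services are not stranded.
    ordered = sorted(services.items(), key=lambda kv: (-kv[1][0], kv[0]))
    for service, (cpu, mem) in ordered:
        # Filter: keep only nodes with enough free CPU and memory.
        candidates = [n for n, (c, m) in free.items() if c >= cpu and m >= mem]
        if not candidates:
            raise RuntimeError(f"no node can fit {service}")
        # Score: prefer the most free CPU; break ties by node name.
        best = min(candidates, key=lambda n: (-free[n][0], n))
        free[best][0] -= cpu
        free[best][1] -= mem
        placement[service] = best
    return placement


# Hypothetical capacities and requests.
nodes = {"node-a": (4000, 8192), "node-b": (2000, 4096)}
services = {
    "nova-api": (1500, 2048),
    "keystone": (1000, 1024),
    "glance": (500, 512),
}
placement = place_services(services, nodes)
print(placement)
```

In this example the two larger services land on the bigger node until its free CPU drops below that of the smaller node, at which point the third service is placed on the smaller node, spreading load without manual tuning.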

High Availability and Fault Tolerance

Cloud services need a high availability (HA) infrastructure to maintain regular functions and prevent mission-critical services from crashing. Typical approaches include designing a cloud architecture to avoid single points of failure, building redundant systems, and self-monitoring to detect unusual failure rates or affected instances.

Alternatives

To accomplish high-availability goals with bare metal OpenStack, an IT team would have to manually set up clustering and high-availability configurations. That’s complicated and requires specialized domain knowledge.

It’s possible to create a fault-tolerant system with VM-based OpenStack, but doing so relies on hypervisor-level or cluster-level features, such as vSphere HA or Pacemaker/Corosync. Plus, these failover mechanisms are slower than Kubernetes-native pod rescheduling.

KubeVirt-based OpenStack deployments likewise rely on VM-based failover processes, which slows recovery significantly compared with containerized microservices.

StarlingX

Instead, with StarlingX:

- Kubernetes natively handles container restarts, self-healing, and automatic failover for OpenStack services.

- StarlingX provides distributed cloud capabilities, ensuring OpenStack control plane availability across multiple sites.

- In-service updates and patches reduce downtime and eliminate the need for disruptive migrations.

- Containerized services restart quickly, in tens of milliseconds versus the minutes needed to restart VMs, which leads to less downtime and higher service quality.
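
The self-healing behavior in the first point follows a general pattern: compare the desired state with the observed state and act on the difference. The Python sketch below illustrates that pattern only; it is not Kubernetes controller code, and the `reconcile` function, the action names, and the replica counts are hypothetical.

```python
def reconcile(desired, running):
    """Return the actions needed to bring running replicas to the desired counts.

    desired: dict of service name -> desired replica count
    running: dict of service name -> list of replica states ("ok" or "failed")
    """
    actions = []
    for service, want in sorted(desired.items()):
        states = running.get(service, [])
        healthy = sum(1 for s in states if s == "ok")
        failed = len(states) - healthy
        if failed:
            # Failed replicas are cleaned up rather than repaired in place.
            actions.append(("remove_failed", service, failed))
        if healthy < want:
            actions.append(("start", service, want - healthy))
        elif healthy > want:
            actions.append(("stop", service, healthy - want))
    return actions


# Hypothetical state: one nova-api replica has crashed.
desired = {"keystone": 2, "nova-api": 3}
running = {"keystone": ["ok", "ok"], "nova-api": ["ok", "failed", "ok"]}
actions = reconcile(desired, running)
print(actions)
```

Running this comparison continuously is what removes the need for an operator to notice a failure and restart the service by hand: the crashed replica is removed and a replacement is started on the next pass.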

Scalability and Edge Readiness

Cloud platforms must add capacity during peak demand to maintain the performance levels their SLAs require. Yet when demand slackens, the extra computing resources are no longer needed; they can be de-allocated to minimize costs. IT admins often juggle a patchwork of solutions to balance system responsiveness during peak demand against the cost of overprovisioning.

Alternatives

To scale cloud environments with bare metal deployments, IT staff must provision and reconfigure servers manually. That’s feasible in some situations, but less suitable for dynamic cloud environments.

With VM-based OpenStack, scaling means provisioning additional VMs, which adds management overhead and delays.

Similarly, scaling OpenStack services via KubeVirt adds another layer of orchestration. That’s slower than native Kubernetes-managed containers.

StarlingX

StarlingX approaches scaling somewhat differently:

- It is designed for distributed cloud environments, and the architecture assumes that OpenStack control and compute nodes may be spread across multiple remote locations.

- OpenStack services running on Kubernetes are auto-scaled dynamically, which ensures efficient resource utilization and minimizes human involvement.

- StarlingX integrates edge features including low-latency networking, enhanced security, and real-time operating system support. The functionality is there and waiting to be used.
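
For a sense of how Kubernetes-style auto-scaling decides on a replica count, the sketch below applies the rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a small tolerance of 1. Whether a particular OpenStack service in StarlingX is scaled this way is an assumption here; the function name, the bounds, and the metric values are hypothetical.

```python
import math


def desired_replicas(current, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Compute a replica count from the ratio of observed to target load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when load is already close to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current
    wanted = math.ceil(current * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical CPU utilization figures (percent) against a 60% target.
scale_up = desired_replicas(3, 90, 60)
scale_down = desired_replicas(4, 30, 60)
steady = desired_replicas(3, 63, 60)
print(scale_up, scale_down, steady)
```

The same rule handles both directions: load above the target adds replicas, and load below it releases them, which addresses the overprovisioning trade-off described at the start of this section.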

The Best Approach

With its use of Kubernetes-native containerization, StarlingX optimizes OpenStack for performance, scalability, high availability, and operational simplicity. In contrast, traditional VM-based OpenStack, bare metal deployments, and KubeVirt-based solutions introduce unnecessary complexity, resource overhead, and maintenance challenges.

For enterprises and service providers looking to modernize their cloud infrastructure, replace VMware, and deploy OpenStack efficiently, StarlingX’s containerized OpenStack approach is the superior choice.

As organizations continue to modernize their IT infrastructure, the need for scalable, highly available, and easily managed cloud solutions will only grow. Migrating from proprietary virtualization solutions such as VMware to OpenStack offers significant benefits, including cost savings and increased flexibility.