From Edge to Enterprise: The StarlingX Advantage

Learn what StarlingX is, what it’s suitable for, and how it can address your company’s cloud computing needs.

In some environments, millisecond delays can prevent critical responses or ruin user experiences. That is particularly true for edge computing and IoT, 5G, and AI applications. The StarlingX cloud solution has addressed those unique challenges in massive deployments for years, with capabilities proven not just at the edge but also at the core and in the enterprise.

StarlingX is an open source cloud computing platform that provides a comprehensive software stack for deploying and managing distributed cloud infrastructure. Developed under the umbrella of the OpenInfra Foundation (formerly called the OpenStack Foundation), StarlingX combines several open source technologies to create a robust, scalable, and highly available solution for edge computing use cases.

Edge computing reduces communication latency by putting computing resources at the network’s edge, close to where the action occurs, such as next to the sensors that collect data or where end users interact with the system. It’s ideal for mission-critical applications that have to operate in real time, require high availability, and are sensitive to the latency of communicating with distant systems in the cloud. Edge computing minimizes the time required to manage smart grids for power distribution, monitor industrial machinery, deliver videos or games, and execute high-frequency trades. Because edge computing means creating highly distributed systems with potentially thousands of nodes, however, managing these systems becomes a challenge.
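To make the distance argument concrete, here is a rough back-of-the-envelope sketch of round-trip propagation delay over optical fiber. It assumes light travels through fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum), and the three distances are hypothetical examples, not measurements from any StarlingX deployment. Real networks add switching, queuing, and processing time on top of this floor.

```python
# Illustrative only: lower bound on round-trip time from propagation delay.
# Assumption: signal speed in optical fiber is about 200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000.0


def round_trip_ms(distance_km: float) -> float:
    """Return the round-trip propagation delay in milliseconds."""
    one_way_seconds = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_seconds * 1000.0


if __name__ == "__main__":
    # Hypothetical distances chosen only to show the order of magnitude.
    examples = [
        ("edge site", 10),
        ("regional data center", 500),
        ("distant cloud region", 2000),
    ]
    for label, km in examples:
        print(f"{label:>22}: {round_trip_ms(km):6.2f} ms")
```

Under these assumptions, a site 10 km away costs about a tenth of a millisecond per round trip, while a cloud region 2,000 km away costs about 20 milliseconds before any processing happens.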

Many applications don’t merely benefit from edge computing; they require it. For example, 5G telephony demands the speed available at the edge to keep callers and Internet users happy, as well as five-nines (99.999%) uptime for the service.
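For a sense of how strict a five-nines target is, the short calculation below converts an availability percentage into the downtime it permits per year. It is plain arithmetic rather than anything specific to StarlingX, and it assumes a 365-day year.

```python
# Illustrative only: convert an availability target into a yearly downtime budget.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the minutes of downtime per year allowed by an availability target."""
    unavailable_fraction = 1.0 - availability_percent / 100.0
    return MINUTES_PER_YEAR * unavailable_fraction


if __name__ == "__main__":
    for target in (99.9, 99.99, 99.999):
        minutes = allowed_downtime_minutes(target)
        print(f"{target}% uptime allows {minutes:.2f} minutes of downtime per year")
```

Five nines leaves a budget of a little over five minutes per year, which is why automated fault detection and recovery matter more than manual intervention.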

What Is StarlingX?

StarlingX is a cloud platform created to address the need for low latency, high availability, and ease of management for highly distributed systems. This is why, for example, Verizon deployed thousands of 5G cell sites using StarlingX for its distributed cloud infrastructure.

As it turns out, those attributes also make StarlingX a good fit for enterprise cloud computing.

StarlingX was founded by Intel® and Wind River®, under what was then the OpenStack Foundation, as a fully open source project. StarlingX 1.0 was released in 2018 under the Apache 2.0 license. StarlingX 10 was delivered in February 2025. Its key contributors have included Wind River Systems, Intel, China Unicom, T-Systems, Verizon, and Vodafone. Today, the platform is used in production deployments by customers with tens of thousands of compute nodes.

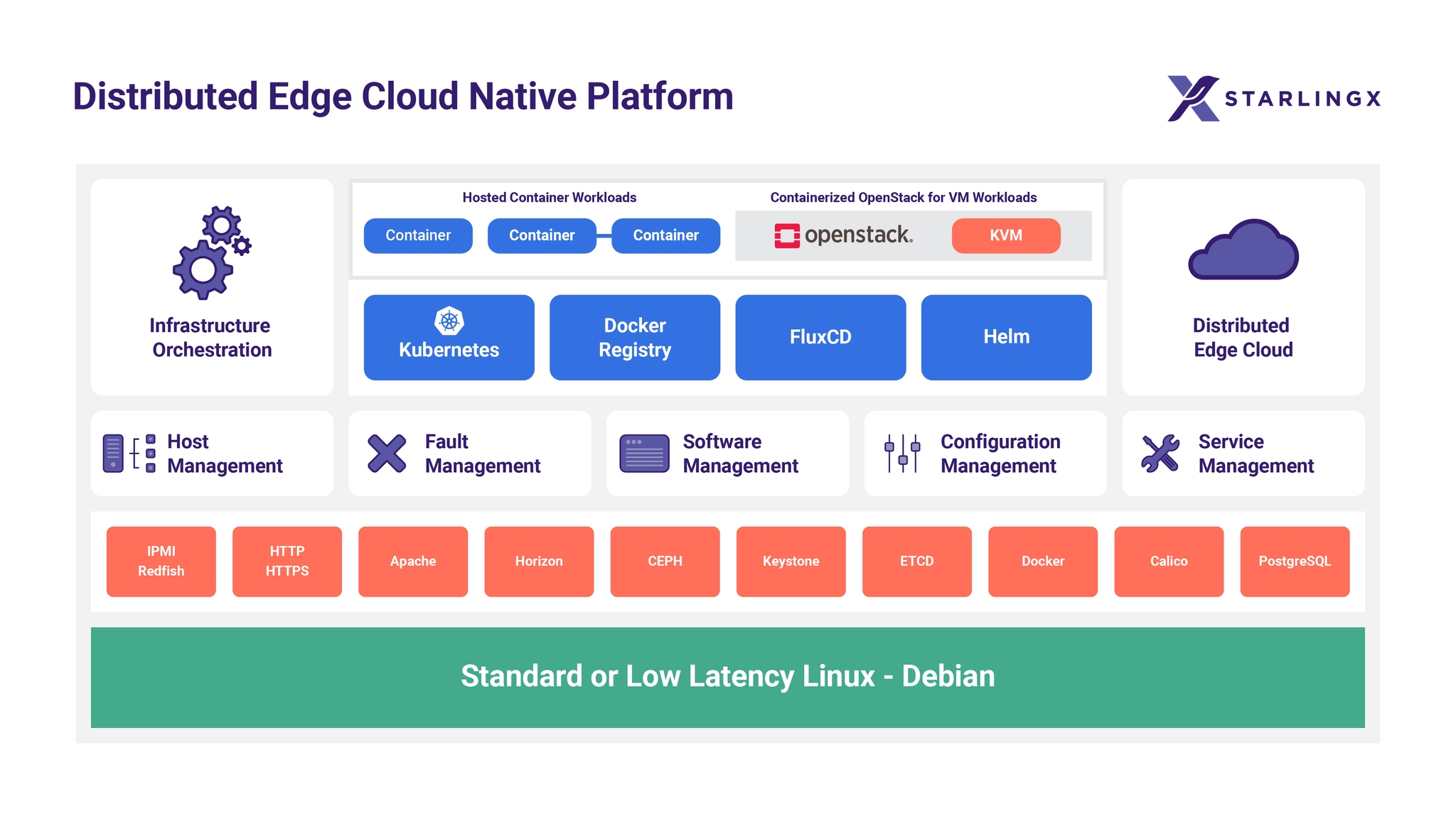

StarlingX integrates multiple open source components to form a complete distributed cloud stack, with ultra-low latency, a small resource footprint, and distributed computing deployment. The components include:

- Linux OS: Hardened for mission-critical environments

- Container runtime: Typically containerd underneath Kubernetes

- Kubernetes: Core orchestration layer for running containerized workloads

- OpenStack (optional): For virtual machine (VM) workloads, when needed alongside containers

- Ceph: Provides high-performance distributed storage for container and VM workloads

- High-speed networking tools: Including Data Plane Development Kit (DPDK), Single Root I/O Virtualization (SR-IOV), and Open vSwitch with DPDK (OVS-DPDK)

StarlingX stands out from its rivals because it provides a fully integrated cloud stack optimized for distributed computing. It is a deployment-ready distributed cloud platform that combines multiple open source technologies — everything needed from the Linux OS to orchestration — in one continuously integrated solution. This integration saves users the effort of piecing together individual components.

This gives administrators a cloud they can deploy on configurations ranging from a single server to up to 5,000 servers. It is suitable for a wide variety of deployment needs, be they edge, core, or enterprise.

StarlingX scales remarkably well. For instance, you can start with a single central cloud (system controller) to manage your entire distributed infrastructure. From there, you can manage up to a thousand edge sites, called subclouds. Each subcloud can be a single-node StarlingX Kubernetes deployment or a complete multi-node cloud configuration. You can combine multiple distributed clouds to create systems with tens of thousands of nodes, or scale down to a single node as appropriate.

You can deploy subclouds in a wide variety of configurations: All-in-One Simplex (AIO-SX) single nodes, redundant all-in-one configurations, separate control and dedicated worker nodes, or dedicated control, storage, and worker nodes. A deployment can scale live from one configuration to the next as demands grow.

To protect the system, StarlingX’s backup and restore functions can run up to 250 cloud backups in parallel and perform 100 edge cloud restores from a single cloud controller.
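As a planning aid, the sketch below estimates how many sequential waves a fleet-wide backup would need at a given parallelism limit. The default limit of 250 comes from the figure above; the function name and the assumption that waves run strictly one after another are illustrative and not part of any StarlingX tool.

```python
# Illustrative only: estimate how many backup waves a fleet needs.
# Assumption: each wave backs up at most `parallel_limit` subclouds and
# waves run one after another. The helper name is hypothetical.
import math


def backup_waves(subclouds: int, parallel_limit: int = 250) -> int:
    """Return the number of sequential waves needed to back up every subcloud."""
    if subclouds < 0:
        raise ValueError("subclouds must be non-negative")
    if parallel_limit <= 0:
        raise ValueError("parallel_limit must be positive")
    return math.ceil(subclouds / parallel_limit)


if __name__ == "__main__":
    for fleet_size in (100, 250, 251, 1000):
        print(f"{fleet_size} subclouds -> {backup_waves(fleet_size)} wave(s)")
```

With these assumptions, a fleet of a thousand subclouds would be covered in four waves.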

In addition, StarlingX has services explicitly developed for typical edge computing requirements. These services address edge computing’s unique challenges, such as limited physical access to sites, the need for rapid fault recovery, and streamlined operations:

- Configuration management: Enables auto-discovery and configuration of new nodes, which is crucial for managing multiple remote sites

- Fault management: Allows you to set, clear, and query custom alarms and logs for infrastructure and virtual resources

- Host management: Provides lifecycle management for host machines, including failure detection and automatic recovery

- Service management: Ensures high availability through redundancy models and active/passive monitoring

- Software management: Facilitates rolling upgrades and updates across all layers of the infrastructure stack

A Distributed Model

Plenty of organizations have decentralized infrastructure. Imagine a virtualized infrastructure distributed across a nationwide energy grid, a 5G network, multiple hospital sites, or even within an industrial robotics factory with hundreds of cells. Everyone wants reliability, efficiency, consistency, and centralized control.

StarlingX’s multi-region, multisite orchestration model helps make that happen. The platform allows administrators to provision and manage a fleet of independent subclouds, each of which can be centrally managed and can run autonomously in its region. The result is fault isolation, operational autonomy, and resiliency, so workloads can remain online even in the event of inter-site connectivity loss. Each subcloud maintains its own control plane, tailored to its size and resource availability, while still participating in global software management, monitoring, and lifecycle orchestration managed from the central region.

This distributed model works well for use cases that require low-latency processing, regulatory data locality, or operational independence across multiple sites but still need a unified deployment and maintenance strategy. By using both centralized and decentralized workload placement, organizations can run applications where they make the most sense, without sacrificing the advantages of a tightly integrated cloud platform. With centralized orchestration tools, operators can roll out software updates, monitor alarms, and apply policy-based configurations to the entire distributed system or to specific sites.

StarlingX abstracts the complexity typically associated with managing distributed infrastructure, combining centralized governance with local autonomy. Its architecture ensures consistency across locations, simplifies DevOps workflows at scale, and enables infrastructure teams to meet the demands of highly distributed, always-on operations.

Speed Advantage

One of StarlingX’s primary advantages is its ultra-low latency, which it achieves using a combination of technologies. With real-time Linux kernel support, StarlingX improves kernel scheduling and reduces application latency. It also provides a low-latency performance profile for worker nodes, optimized for applications with stringent latency constraints. And it supports Open vSwitch with Data Plane Development Kit (OVS-DPDK) to reduce network packet delivery latency.

StarlingX permits users to isolate CPU cores for critical low-latency applications, which helps them run efficiently. It supports up to 5,000 manageable All-in-One Simplex (AIO-SX) subclouds, enabling distributed low-latency cloud deployments. Finally, it includes enhanced Precision Time Protocol (PTP) clocking features, enabling sub-microsecond accuracy for timing-critical applications such as 5G, financial trading systems, power and utility grids, industrial automation and robotics, and aerospace and defense.
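To show where PTP's accuracy comes from, the sketch below works through the standard delay request-response arithmetic that PTP uses to estimate a clock's offset. It is a simplified illustration, not StarlingX code: it assumes a symmetric network path, and the four timestamps are invented values in nanoseconds.

```python
# Illustrative only: the offset and path-delay arithmetic behind PTP's
# delay request-response exchange. Assumes the path is symmetric.
#   t1: time source sends Sync           (source clock)
#   t2: receiver gets Sync               (receiver clock)
#   t3: receiver sends Delay_Req         (receiver clock)
#   t4: time source receives Delay_Req   (source clock)


def ptp_offset_and_delay(t1: int, t2: int, t3: int, t4: int) -> tuple[float, float]:
    """Return (receiver clock offset, mean one-way path delay) in input units."""
    source_to_receiver = t2 - t1  # path delay plus offset
    receiver_to_source = t4 - t3  # path delay minus offset
    offset = (source_to_receiver - receiver_to_source) / 2
    delay = (source_to_receiver + receiver_to_source) / 2
    return offset, delay


if __name__ == "__main__":
    # Invented timestamps: the receiver runs 400 ns ahead and the
    # one-way path delay is 1,000 ns.
    offset_ns, delay_ns = ptp_offset_and_delay(t1=0, t2=1400, t3=5000, t4=5600)
    print(f"offset: {offset_ns} ns, path delay: {delay_ns} ns")
```

Because the path delay cancels out of the offset term, the estimate is only as good as the timestamps and the path symmetry, which is why hardware timestamping matters for sub-microsecond targets.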

Of course, speed and low latency are important, but high availability is critical. StarlingX’s architecture is built on several features and design principles aimed at high service uptime. Among them is a robust fault management system that constantly monitors the health of all nodes in the cloud, including host heartbeats over all network interfaces; platform resource usage (e.g., CPU, memory, and disk); critical processes; and Baseboard Management Controller (BMC) hardware sensors.

If something goes awry, StarlingX’s fault management system reports state changes to StarlingX’s upper layers for impact analysis and recovery. When an issue is reported, the system automatically triggers the migration of active instances to healthy nodes. Migration can be done both locally and to other physical locations if need be.

For the most part, StarlingX can and does keep itself up and running. The platform implements automated recovery procedures for failed hosts. It also supports active/active or active/standby high-availability services across controller nodes.

Administrators aren’t kept out of the loop. StarlingX classifies alarms by severity and lets system operators monitor things via an active alarm list and global alarm banner. These are accessible via a command-line interface, graphical user interface, and REST API.
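The idea of an active alarm list with a severity banner can be sketched with a small in-memory model. This is a toy illustration and not the StarlingX fault management API: the class names, alarm identifiers, and the four severity levels used here are assumptions chosen for the example.

```python
# Illustrative only: a toy active alarm list with set, clear, query, and a
# severity banner. All names and severity levels here are assumptions.
from collections import Counter
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    WARNING = 1
    MINOR = 2
    MAJOR = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Alarm:
    alarm_id: str
    entity: str
    severity: Severity
    reason: str


class ActiveAlarmList:
    def __init__(self) -> None:
        # One active alarm per (alarm_id, entity) pair.
        self._alarms: dict[tuple[str, str], Alarm] = {}

    def set(self, alarm: Alarm) -> None:
        """Raise an alarm, replacing any existing one for the same key."""
        self._alarms[(alarm.alarm_id, alarm.entity)] = alarm

    def clear(self, alarm_id: str, entity: str) -> bool:
        """Clear an alarm; return True if it was active."""
        return self._alarms.pop((alarm_id, entity), None) is not None

    def query(self, min_severity: Severity = Severity.WARNING) -> list[Alarm]:
        """Return active alarms at or above a severity, most severe first."""
        matches = [a for a in self._alarms.values() if a.severity >= min_severity]
        return sorted(matches, key=lambda a: a.severity, reverse=True)

    def banner(self) -> dict[str, int]:
        """Return per-severity counts, like a global alarm banner."""
        counts = Counter(a.severity for a in self._alarms.values())
        return {s.name.lower(): counts.get(s, 0) for s in sorted(Severity, reverse=True)}


if __name__ == "__main__":
    alarms = ActiveAlarmList()
    alarms.set(Alarm("demo.cpu", "host=worker-0", Severity.MAJOR, "CPU usage high"))
    alarms.set(Alarm("demo.disk", "host=worker-1", Severity.WARNING, "Disk filling up"))
    print(alarms.banner())
    print([a.alarm_id for a in alarms.query(Severity.MINOR)])
```

Keying alarms by identifier and entity keeps repeated reports of the same condition from inflating the banner counts.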

To ensure that its services are available when you need them, StarlingX also uses several high-availability redundancy models. These include the N+M redundancy model, the N across multiple nodes redundancy model, and the 1+1 HA Controller Cluster model. Above this subcloud level, StarlingX deploys geo-redundancy for distributed clouds using the 1+1 Active-Active Redundancy model for system controllers, which supports up to a thousand subclouds.
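The N+M idea is easy to reason about with a few lines of arithmetic: N units are needed to carry the load, and M spares stand by to replace failures. The sketch below is a simplified model under that assumption, with hypothetical function names; it ignores failover time and treats every unit as interchangeable.

```python
# Illustrative only: a simplified N+M redundancy model.
# Assumption: N units carry the load, M spares can each replace any failed
# unit, and failover is instantaneous. Function names are hypothetical.


def surviving_capacity(n_active: int, m_standby: int, failed: int) -> int:
    """Return how many of the N required units can still be served."""
    total = n_active + m_standby
    if not 0 <= failed <= total:
        raise ValueError("failed must be between 0 and N + M")
    healthy = total - failed
    return min(healthy, n_active)


def fully_available(n_active: int, m_standby: int, failed: int) -> bool:
    """Return True if the pool still delivers all N required units."""
    return surviving_capacity(n_active, m_standby, failed) == n_active


if __name__ == "__main__":
    # A 1+1 pair tolerates one failure; a 4+2 pool tolerates two.
    print(fully_available(1, 1, failed=1))
    print(fully_available(4, 2, failed=2))
    print(fully_available(4, 2, failed=3))
```

In this model a pool stays fully available as long as the number of failures does not exceed M, which is the property the 1+1 controller pairing relies on.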

Comprehensive Security Baked In

StarlingX employs a comprehensive set of security features. This starts with infrastructure security, which includes UEFI Secure Boot on StarlingX hosts, as well as signed ISOs, patches, and updates. It also includes authentication and authorization on all interfaces; audit logging for operator commands and authentication events using Linux auditd; and OpenSCAP modules. StarlingX security also includes AppArmor support for confining programs to limited resources. Last but not least, it includes firewall functionality and the Istio service mesh for deploying and managing network and security policies on Kubernetes-based networks.

For containers and applications, StarlingX comes with cert-manager for automated TLS certificate management, secure secret management using HashiCorp Vault, and Role-Based Access Control (RBAC) for Kubernetes. You can strengthen workload isolation with Kata Containers, which run containers inside lightweight virtual machines (VMs). StarlingX also encrypts Kubernetes secret data at rest.

Beyond the Edge

StarlingX technology is good for more than a pure edge play. It’s suitable as a complete enterprise infrastructure.

StarlingX delivers a fully cloud-native, Kubernetes- and container-based architecture for the development, deployment, operations, and servicing of distributed edge networks at scale. It addresses complex challenges in deploying and managing a cloud-native infrastructure for core-to-edge distributed cloud networks. For example, Wind River recently demonstrated this by helping Boost Mobile move from VMware to Wind River Cloud Platform, a StarlingX distribution. In this case, StarlingX was used to make the vendor changeover for every cloud site, including enterprise, core telecommunications infrastructure, and even an Open Radio Access Network (O-RAN) that provides 5G voice and data to millions of Americans.

StarlingX also orchestrates new service deployment across a complex cloud topology, with end-to-end automation and zero-touch provisioning. In addition, Cloud Platform analytics technology can help manage a distributed cloud network effectively, using AI/ML-generated insights for intelligent and automated decision-making.

As Paul Miller, Wind River’s CTO, said, “Don’t pigeonhole StarlingX as a pure edge play. Every single piece of cloud infrastructure in the Boost Mobile network, from the core to the center, over 20,000 sites, is all based on StarlingX.”

Using StarlingX Today and Tomorrow

Many companies already use StarlingX. In addition to the aforementioned 5G for telecom scenarios, the platform’s uses include smart manufacturing and automation systems, autonomous vehicles, low-latency augmented reality and virtual reality applications, and high-bandwidth video delivery. Expect more.

StarlingX has already gained traction among telecom operators and other enterprises. Notable users include T-Systems, which deploys StarlingX for edge computing use cases; Vodafone, which uses StarlingX for edge and 5G applications; and Xunison, where StarlingX is part of its smart home technologies.

The platform’s flexibility allows StarlingX to be deployed on a wide range of hardware configurations, from single-server setups to large, distributed clusters. This versatility makes it attractive for both small-scale edge deployments and large telecom networks.

For all of its edge strengths, don’t forget that StarlingX is more than just an edge cloud. Improvements in its last few versions, including the just-released StarlingX 10, and its incorporation of other open source cloud projects, such as Kubernetes, have made it suitable for any cloud-native computing use.

Edge computing is growing in importance. StarlingX is positioned to play a crucial role in this evolving landscape. Wind River is actively contributing to the open source project’s success, with hundreds of developers contributing code to it every day. Customers who want a commercially supported distribution of StarlingX with updates, service, and support are invited to explore Wind River Cloud Platform and Wind River OpenStack in more depth.