RIP OVDK: High-Performance Virtual Switching Available Now in Commercial Telecom Platform

Intel recently announced that they have discontinued the OVDK virtual switch (SDNCentral article here). This prompted questions from service providers and Telecom Equipment Manufacturers (TEMs) who need high-performance, Carrier Grade vSwitch solutions for deployment in commercial networks as part of their Network Functions Virtualization (NFV) infrastructure.

There is life after OVDK and no reason for concern.

In this post, we’ll outline how the Titanium Server platform from Wind River provides exactly what is required, in terms of performance, reliability, integration and availability.

We’ll explain how the vSwitch in Titanium Server enables significant reductions in service provider OPEX, which of course is a key business objective for adopting NFV in the first place.

(For anyone not familiar with the history of OVDK, it was announced early in 2013 as an approach to maximizing vSwitch performance for traffic comprising mainly small packets, which is the typical scenario for telecom infrastructure. The OVDK project, based on the Intel® DPDK library, leveraged packet processing in user space to improve switching performance compared to the open-source Open vSwitch, or OVS. Intel has now decided to discontinue OVDK and instead contribute to the OVS project.)

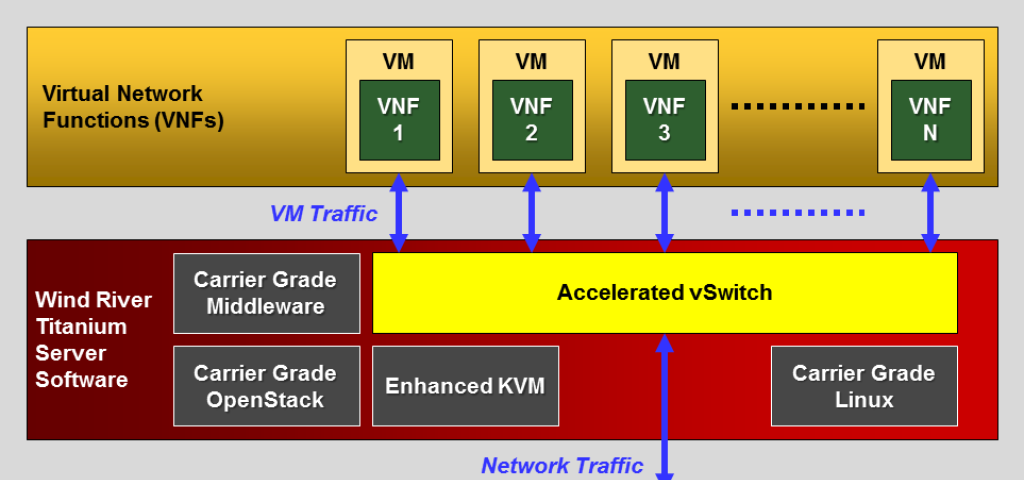

In the NFV architecture, the vSwitch is responsible for switching network traffic between the core network and the virtualized applications or Virtual Network Functions (VNFs) that are running in Virtual Machines (VMs). The vSwitch runs on the same server platform as the VNFs. Obviously, processor cores that are required for running the vSwitch are not available for running VNFs and this can have a significant effect on the number of subscribers that can be supported on a single server blade. This in turn impacts the overall operational cost-per-subscriber and has a major influence on the OPEX improvements that can be achieved by a move to NFV.

Wind River’s Titanium Server platform is a commercially-available solution that addresses this challenge.

Let’s look at an example to illustrate how the Accelerated vSwitch (AVS) in Titanium Server results in big OPEX savings for service providers:

To keep the analysis simple, we’ll assume that we need to instantiate a function such as a media gateway as a VNF and that it requires a bandwidth of 2 million packets per second (2Mpps) from the vSwitch. For a further level of simplification, we’ll assume that we’re going to instantiate a single VM, running this VNF, on each processor core. So we need to calculate how many VMs we can actually instantiate on our server blade, given that some of the available cores will be required for the vSwitch function.

As the reference platform for our analysis, we’ll use a dual-socket Intel® Xeon® Processor E5-2600 series platform (“Ivy Bridge”) running at 2.9GHz, with a total of 24 cores available across the two sockets. All our performance measurements are based on bidirectional network traffic running from the Network Interface Card (NIC) to the vSwitch, through a VM and back through the vSwitch to the NIC. This represents a real-world NFV configuration, rather than a simplified configuration in which traffic runs only from the NIC to the vSwitch and back to the NIC, bypassing the VM so that no useful work is performed.

In the first scenario, we use OVS to switch the traffic to the VMs on the platform. Measurements show that each core running OVS can switch approximately 0.3Mpps of traffic to a VM (64-byte packets). The optimum configuration for our 24-core platform will be to use 20 cores for the vSwitch, delivering a total of 6Mpps of traffic. This traffic will be consumed by 3 cores running VMs and one core will be unused. We can’t run VMs on more than 3 cores because OVS can’t deliver the bandwidth required. So our resource utilization is 3 VMs per blade.

What if we replace OVS with Titanium Server’s Accelerated vSwitch (AVS)? We can now switch 12Mpps per core, again assuming 64-byte packets. So our 24-core platform can be configured with 4 cores running the vSwitch. At 12Mpps per core, these provide up to 48Mpps of switching capacity, which covers the 40Mpps required by 20 VMs running on the remaining 20 cores. Our resource utilization is now 20 VMs per blade, with no unused cores, thanks to the use of the AVS software optimized for NFV infrastructure.

From a business perspective, increasing the number of VMs per blade by a factor of 6.7 (20 divided by 3) allows us to serve the same number of customers using only 15% as many blades as when OVS was used, or to serve 6.7 times as many customers using the same server rack. In either case, this represents a very significant reduction in OPEX and it can be achieved with no changes required to the VNFs themselves.
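The blade arithmetic above can be checked with a short sketch. The deployment size of 600 VNF instances is a hypothetical figure chosen only for illustration; the 3 and 20 VMs per blade are the results from the two scenarios above, and `blades_needed` is an illustrative helper name.

```python
def blades_needed(vnf_instances, vms_per_blade):
    """Blades required to host the given number of VNF instances (rounded up)."""
    # Integer ceiling division avoids floating-point rounding.
    return -(-vnf_instances // vms_per_blade)


instances = 600  # hypothetical deployment size, not a figure from this post

ovs_blades = blades_needed(instances, 3)   # OVS scenario: 3 VMs per blade
avs_blades = blades_needed(instances, 20)  # AVS scenario: 20 VMs per blade

blade_ratio = avs_blades / ovs_blades      # fraction of blades still needed
density_gain = 20 / 3                      # VMs-per-blade improvement

print(f"OVS: {ovs_blades} blades, AVS: {avs_blades} blades")
print(f"AVS needs {blade_ratio:.0%} as many blades ({density_gain:.1f}x density)")
```

For this assumed deployment size, the sketch gives 200 blades with OVS against 30 with AVS, which is the 15% figure quoted above.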

The scenario above (2Mpps per VM, one VM per core, dual-socket platform) probably isn’t representative of your specific application needs. But it’s straightforward to recalculate the savings for any given set of requirements. You just need to figure out the optimum balance of vSwitch cores and VM cores for the bandwidth you need, minimizing the number of unused cores. Please feel free to contact us and we’ll be glad to run the numbers for you.
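As a rough illustration of that recalculation, here is a minimal sketch that searches for the split of vSwitch cores and VM cores that maximizes the number of VMs. It keeps the simplifications used in this post (one VM per core, vSwitch throughput scaling linearly with the number of cores), and the function name `best_core_split` is illustrative only. Rates are expressed in thousands of packets per second so the arithmetic stays in integers.

```python
def best_core_split(total_cores, vswitch_kpps_per_core, vm_kpps_required):
    """Return (vswitch_cores, vm_count, unused_cores) that maximizes vm_count.

    Assumes one VM per core and linear vSwitch scaling, as in the post.
    Ties are resolved in favor of fewer vSwitch cores.
    """
    best = (0, 0, total_cores)
    for vswitch_cores in range(1, total_cores):
        capacity = vswitch_cores * vswitch_kpps_per_core
        vms_by_bandwidth = capacity // vm_kpps_required  # limited by vSwitch
        vms_by_cores = total_cores - vswitch_cores       # limited by free cores
        vms = min(vms_by_bandwidth, vms_by_cores)
        if vms > best[1]:
            best = (vswitch_cores, vms, total_cores - vswitch_cores - vms)
    return best


# Figures from the example: 24 cores, 2Mpps per VM,
# OVS at 0.3Mpps per core and AVS at 12Mpps per core.
ovs_split = best_core_split(24, 300, 2_000)
avs_split = best_core_split(24, 12_000, 2_000)

print("OVS (vSwitch cores, VMs, unused):", ovs_split)
print("AVS (vSwitch cores, VMs, unused):", avs_split)
```

With the figures from this post, the search returns 20 vSwitch cores, 3 VMs and 1 unused core for OVS, and 4 vSwitch cores, 20 VMs and no unused cores for AVS. Changing the three arguments lets you try other core counts or per-VM bandwidth requirements.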

Next, let’s briefly talk about reliability.

As well as the performance advantages described above, Titanium Server’s AVS provides the Carrier Grade features that represent critical requirements for live telecom networks, such as:

- ACL and QoS protection, guarding against DoS attacks and enabling intelligent discards in overload situations.

- Full live VM migration with less than 150ms service impact.

- Hitless software patching and upgrades.

- Link protection with failover in 50ms or less.

- Fully isolated NIC interfaces.

We often talk to service providers and TEMs who are considering the use of either PCI Pass-through or Single-Root I/O Virtualization (SR-IOV), two approaches that have been developed to boost switching performance. Neither of these approaches, however, delivers the kind of Carrier Grade features listed above. This prevents service providers from providing the network reliability that is required by telecom customers, namely six-nines (99.9999%) infrastructure uptime.
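To put the six-nines target in perspective, the sketch below converts an availability percentage into an annual downtime budget. It assumes a 365-day year, and `downtime_budget_seconds` is an illustrative helper, not part of any product.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumes a 365-day year


def downtime_budget_seconds(availability_percent):
    """Maximum downtime per year, in seconds, for a given availability."""
    return SECONDS_PER_YEAR * (1 - availability_percent / 100)


six_nines = downtime_budget_seconds(99.9999)
five_nines = downtime_budget_seconds(99.999)

print(f"Six nines:  {six_nines:.1f} seconds of downtime per year")
print(f"Five nines: {five_nines / 60:.2f} minutes of downtime per year")
```

Six-nines uptime leaves roughly 31.5 seconds of downtime per year, compared with a little over five minutes at five nines.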

With AVS now available as part of Titanium Server, there’s no need to forsake these critical Carrier Grade features in order to meet performance targets. AVS delivers performance that is equivalent to or better than either PCI pass-through or SR-IOV, while at the same time enabling service providers to achieve the telco-grade reliability they need as they progressively deploy NFV in their networks.

So, there’s no need to mourn the passing of OVDK. Through the Accelerated vSwitch incorporated in Titanium Server, service providers and TEMs can achieve best-in-class virtual switching performance while also ensuring Carrier Grade reliability in their networks. All based on a solution that’s available now and supported by a partner ecosystem of industry-leading NFV companies.