What is Needed for a Modern User Interface?

I am astounded at the transformation of the user interface in recent history. The latest trends include facial recognition for authentication and gesture technology, in which the user describes a symbol to the device, either visually or through the device's accelerometers, in order to authenticate. But each time these innovations come about, we should ask the question: is it all really necessary?

Looking into the history of user interfaces on embedded devices, we find some clear cause-and-effect situations. Take, for example, the IMSAI personal computer, which predates the Apple and the IBM PC.

While its front panel was the “cool” factor of its day, each switch and LED had a dedicated meaning. And the physical switches meant you knew what state each switch was in before you toggled in an instruction. Intuitive, no. Informative, yes, if you knew how to use it. And once you knew how to use it, it was a very effective way of programming the computer directly with machine code. But the days of physical toggle switches and red LEDs are long past, and user interfaces have evolved.

You have likely heard the stories of children walking up to a TV and touching the screen, expecting it to behave like an iPhone and scroll to the next channel.

Some of these novel ideas are here to stay, and others will go the way of the IMSAI front panel. The question remains: what is right for my embedded design?

Touch is a great example. Touch makes sense when the information you are displaying varies wildly in size and dimensions. Zooming into an area is quicker and more intuitive on a touch screen, and you can even use some of the screen's real estate for user interface buttons, eliminating the need for external buttons. This might save you on your bill of materials.

Yet others prefer a more physical approach and want a physical button to press. Consider an AED (Automated External Defibrillator): ease of use precludes a screen, so the device has only audio commands and a large red button.

Cost enters in as well. The larger the screen and touch interface, the more costly the device is to manufacture. You may instead be able to use a mouse for navigation and some discrete buttons for ease of use.

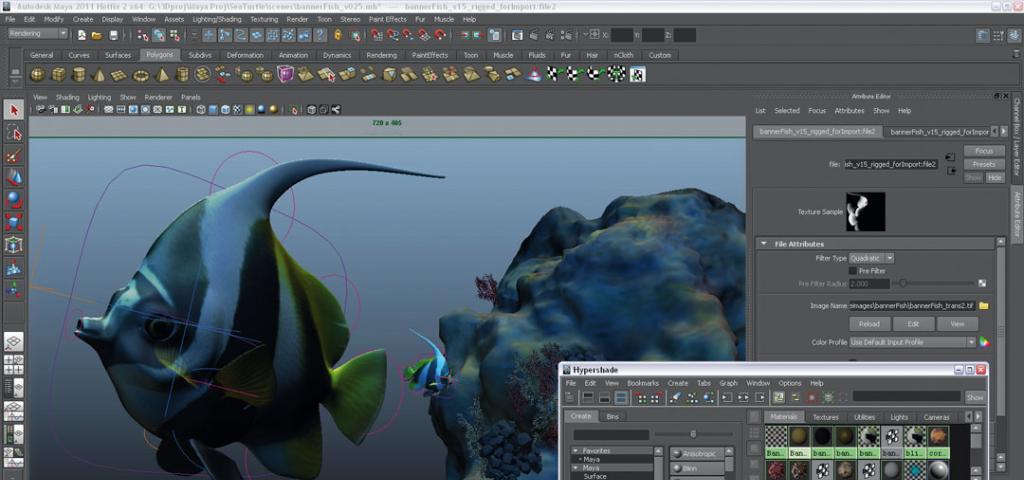

Usability is a key factor in all user interfaces. With the GPU acceleration available in modern SoCs, we have 3D user interfaces at our fingertips. We can create stunning user interfaces that scroll effortlessly, ripple as if on water, or simulate entire environments. These can be developed on a framework like Qt.

But does this help in the industrial control room?

Mid-range 2D graphics lowers the cost, simplifies the design, and achieves a feature-right UI design. Un-accelerated graphics means the user interface is rendered by the CPU with only a frame buffer. This works only if the user interface does not contain much animation and if the CPU has bandwidth to spare. Two questions to ask are: does the user intuitively understand what the user interface is telling them, and do they know how to interact with it? If so, then the user interface is doing its job.
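To get a rough feel for the CPU bandwidth question, it can help to estimate how many bytes per second the CPU has to write into the frame buffer. The sketch below is only a back-of-the-envelope illustration: the function name, the 800x480 16-bit panel, the frame rates, and the redraw fractions are all assumed values, not figures from any particular product.

```python
def framebuffer_write_rate(width, height, bytes_per_pixel, fps, redraw_fraction=1.0):
    """Bytes per second the CPU writes when redrawing part of the screen each frame.

    redraw_fraction is the share of the screen that changes per frame (0 to 1).
    """
    if not 0.0 <= redraw_fraction <= 1.0:
        raise ValueError("redraw_fraction must be between 0 and 1")
    return width * height * bytes_per_pixel * fps * redraw_fraction


# Hypothetical 800x480 panel with 16-bit color (2 bytes per pixel).
# A mostly static control screen: 10% of the pixels change, 5 updates a second.
static_ui = framebuffer_write_rate(800, 480, 2, fps=5, redraw_fraction=0.1)
# A fully animated screen: every pixel redrawn 60 times a second.
animated_ui = framebuffer_write_rate(800, 480, 2, fps=60, redraw_fraction=1.0)

print(f"mostly static UI:      {static_ui / 1e6:.2f} MB/s")    # 0.38 MB/s
print(f"full-screen animation: {animated_ui / 1e6:.2f} MB/s")  # 46.08 MB/s
```

Under these assumptions the animated screen needs 120 times the write traffic of the mostly static one, and that is before counting the CPU work to compute each pixel or any reads needed for blending. This is why a lightly animated interface is a much better fit for un-accelerated rendering.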

The key is to keep the user interface simple and elegant. This means using technology that is readily available at a minimal price point and having a graphic artist create visually stunning elements for the user interface. This will address your customers' concerns about ease of use, lower your BOM costs, and decrease your time to market.

This week at IDF, Wind River is demonstrating VxWorks data visualization with graphics, touch, and user interface for the Internet of Things. The demo highlights the tight integration between OS and graphics, native graphics support on a wide range of hardware, and a driver and software OpenVG stack of less than 500 KB. If you’re at the show, come to booth #200 to check it out!

For additional information from Wind River, visit us on Facebook.